Passing the Microsoft DP-200 exam can help you qualify for good technical jobs, and the Implementing an Azure Data Solution exam has become a popular certification target. Are you looking for a way to validate your DP-200 skills? https://www.pass4itsure.com/dp-200.html can help. Pass4itsure is an online site for Microsoft DP-200 exam preparation if you want to pass your exam on the first try with good marks.

What is the DP-200 exam?

Exam by: Microsoft

Exam code: DP-200

Exam name: Implementing an Azure Data Solution

For more Microsoft exam questions, please see here.

Candidates for the DP-200 exam are Microsoft Azure data engineers who collaborate with business stakeholders to identify and meet data requirements, and who implement data solutions that use Azure data services.

| Exam Format | 1. Scenario Yes/No: 9 questions. 2. Multiple choice (single/multiple answer, drag-and-drop ordering, and hot area): 30 questions. 3. Labs done within a virtual machine: 12 tasks. 4. Case study (multiple choice): 8 questions. |

| Exam Time | 210 minutes, including 30 minutes for the NDA and feedback, which leaves 180 minutes to answer all the questions. |
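To turn those numbers into a rough pacing target, here is a minimal Python sketch. It only uses the item counts and times from the table above; the even split per item is my own simplification, since lab tasks will usually take longer than a yes/no question.

```python
# Item counts and timing as listed in the exam format table above.
ITEM_COUNTS = {
    "scenario yes/no": 9,
    "multiple choice, drag-drop, hot area": 30,
    "lab tasks": 12,
    "case study": 8,
}
TOTAL_MINUTES = 210
NDA_AND_FEEDBACK_MINUTES = 30


def answering_minutes(total=TOTAL_MINUTES, overhead=NDA_AND_FEEDBACK_MINUTES):
    """Minutes left for answering once the NDA and feedback time is removed."""
    return total - overhead


def average_minutes_per_item(counts=ITEM_COUNTS):
    """Naive even split of the answering time across every question and task."""
    return answering_minutes() / sum(counts.values())


if __name__ == "__main__":
    print(f"Answering time: {answering_minutes()} minutes")
    print(f"Total items: {sum(ITEM_COUNTS.values())}")
    print(f"Average per item: {average_minutes_per_item():.1f} minutes")
```

That works out to roughly three minutes per item, so it is worth deciding up front how much of the budget you want to reserve for the labs.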

Exam topics:

| Firewall Rules & Virtual Networks. |

| On-Prem to Azure (Data Sync, DMS, etc). |

| Security & Compliance (Data Masking, TDE, Always Encrypted, Data Discovery, Threat Protection, Active Directory Integration, Auditing, etc). |

| Availability Capability (Backups, Geo-Replication, Failover Groups, etc). |

| Performance Monitoring (DMVs, Performance Recommendations, Query Performance Insight, Auto Tuning, Diagnostics Logging, etc). |

| Elastic Pools, Managed Instances, Purchase Models & Service Tiers (Hyperscale, etc). |

| Distributions, Partitioning, Resource Classes, Polybase, External Tables, CTAS, Performance Monitoring (DMVs), Authentication & Security, etc. |

| Cloud vs Edge, Inputs, Outputs, UDAs, UDFs, Window Functions, Stream vs Reference, Partitioning, Performance, Scaling. |

| Pipelines & Activities, Datasets, Linked Services, Integration Runtimes, Triggers, Authoring/Monitoring Experience. |

| Blobs vs ADLS Gen2, Data Redundancy, Security, Performance Tiers, Storage Lifecycle, Migration. |

| Keys, Secrets, Certs, and Access Policies. |

| Use Cases in Architecture, Terminology, Availability, Consistency, Scalability. |

| Sources, Topics, Subscriptions, Handlers, Use Cases in Architecture. |

| Use Cases in Architecture. |

| Use Cases for APIs, Consistency Levels, Distribution, Partitioning. |

| Use Cases in Architecture, Clusters, Secrets, Connecting to Data Sources, Performance Metrics. |

| Use Cases in Architecture, Clusters, Secrets, Connecting to Data Sources, Performance Metrics. |

| Application, Alerts, Log Analytics. |

How do I get better results in the Microsoft DP-200 exam?

To get better results in the Microsoft DP-200 exam, you first need better preparation.

It’s important to follow a Microsoft learning path for Azure data engineers. The content is rich and varied, so learning does not become boring: you read, watch videos, answer questions, and get hands-on experience in a sandbox environment. I will share some free material below:

Read

Read the related resources listed above (Azure SQL Database ……).

Latest DP-200 practice test questions and answers

Free Microsoft DP-200 Dumps | Real Exam Questions (from Pass4itsure)

QUESTION 1

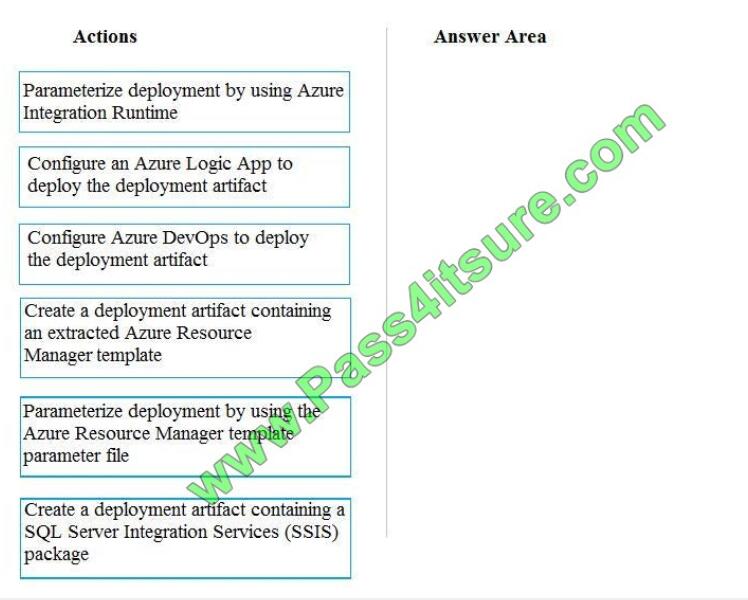

You need to ensure that phone-based polling data can be analyzed in the PollingData database.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

All deployments must be performed by using Azure DevOps. Deployments must use templates used in multiple environments. No credentials or secrets should be used during deployments.

QUESTION 2

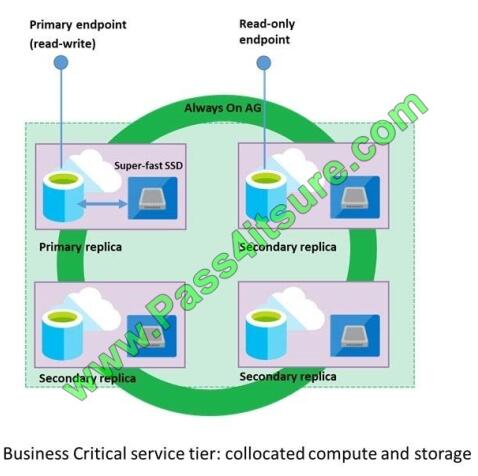

A company plans to use Azure SQL Database to support a mission-critical application.

The application must be highly available without performance degradation during maintenance windows.

You need to implement the solution.

Which three technologies should you implement? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Premium service tier

B. Virtual machine Scale Sets

C. Basic service tier

D. SQL Data Sync

E. Always On availability groups

F. Zone-redundant configuration

Correct Answer: AEF

A: The Premium/Business Critical service tier model is based on a cluster of database engine processes. This architectural model relies on the fact that there is always a quorum of available database engine nodes and has minimal performance impact on your workload even during maintenance activities.

E: In the premium model, Azure SQL Database integrates compute and storage on a single node. High availability in this architectural model is achieved by replication of compute (SQL Server Database Engine process) and storage (locally attached SSD) deployed in a 4-node cluster, using technology similar to SQL Server Always On Availability Groups.

F: Zone-redundant configuration. By default, the quorum-set replicas for the local storage configurations are created in the same datacenter. With the introduction of Azure Availability Zones, you have the ability to place the different replicas in the quorum-sets to different availability zones in the same region. To eliminate a single point of failure, the control ring is also duplicated across multiple zones as three gateway rings (GW).

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-high-availability

QUESTION 3

A company has a Microsoft Azure HDInsight solution that uses different cluster types to process and analyze data.

Operations are continuous.

Reports indicate slowdowns during a specific time window.

You need to determine a monitoring solution to track down the issue in the least amount of time.

What should you use?

A. Azure Log Analytics log search query

B. Ambari REST API

C. Azure Monitor Metrics

D. HDInsight .NET SDK

E. Azure Log Analytics alert rule query

Correct Answer: B

Ambari is the recommended tool for monitoring the health for any given HDInsight cluster.

Note: Azure HDInsight is a high-availability service that has redundant gateway nodes, head nodes, and ZooKeeper nodes to keep your HDInsight clusters running smoothly. While this ensures that a single failure will not affect the functionality of a cluster, you may still want to monitor cluster health so you are alerted when an issue does arise. Monitoring cluster health refers to monitoring whether all nodes in your cluster and the components that run on them are available and functioning correctly. Ambari is the recommended tool for monitoring utilization across the whole cluster. The Ambari dashboard shows easily glanceable widgets that display metrics such as CPU, network, YARN memory, and HDFS disk usage. The specific metrics shown depend on cluster type. The “Hosts” tab shows metrics for individual nodes so you can ensure the load on your cluster is evenly distributed.

References: https://azure.microsoft.com/en-us/blog/monitoring-on-hdinsight-part-1-an-overview/
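If you want to try the Ambari REST API yourself, the sketch below shows roughly what a call looks like from Python. The URL pattern is the one HDInsight exposes for Ambari on a cluster; the cluster name, login, and the optional `fields` filter are placeholders I made up for illustration, and the helper names are hypothetical.

```python
import base64
import json
import urllib.request


def ambari_hosts_url(cluster_name, fields=""):
    """Build the Ambari 'hosts' endpoint URL for an HDInsight cluster."""
    url = (
        f"https://{cluster_name}.azurehdinsight.net"
        f"/api/v1/clusters/{cluster_name}/hosts"
    )
    # 'fields' is an optional Ambari filter; which fields you ask for is up to you.
    return f"{url}?fields={fields}" if fields else url


def basic_auth_header(user, password):
    """HTTP Basic authentication header for the cluster login account."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


def fetch_hosts(cluster_name, user, password, fields=""):
    """Call the endpoint and return the parsed JSON (needs a reachable cluster)."""
    request = urllib.request.Request(
        ambari_hosts_url(cluster_name, fields),
        headers=basic_auth_header(user, password),
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


if __name__ == "__main__":
    # Placeholder cluster name; nothing is sent over the network here.
    print(ambari_hosts_url("mycluster"))
```

Only `fetch_hosts` touches the network, so you can check the URL and header locally before pointing it at a real cluster.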

QUESTION 4

A company uses Azure SQL Database to store sales transaction data. Field sales employees need an offline copy of the database that includes last year’s sales on their laptops when there is no internet connection available.

You need to create the offline export copy.

Which three options can you use? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Export to a BACPAC file by using Azure Cloud Shell, and save the file to an Azure storage account

B. Export to a BACPAC file by using SQL Server Management Studio. Save the file to an Azure storage account

C. Export to a BACPAC file by using the Azure portal

D. Export to a BACPAC file by using Azure PowerShell and save the file locally

E. Export to a BACPAC file by using the SqlPackage utility

Correct Answer: BCE

You can export to a BACPAC file using the Azure portal.

You can export to a BACPAC file using SQL Server Management Studio (SSMS). The newest versions of SQL Server Management Studio provide a wizard to export an Azure SQL database to a BACPAC file.

You can export to a BACPAC file using the SQLPackage utility.

Incorrect Answers:

D: You can export to a BACPAC file using PowerShell. Use the New-AzSqlDatabaseExport cmdlet to submit an export database request to the Azure SQL Database service. Depending on the size of your database, the export operation may take some time to complete. However, the file is not stored locally.

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-export
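For the SqlPackage option, here is a small Python sketch that assembles the export command line. The `/Action:Export`, `/SourceServerName`, `/SourceDatabaseName`, `/SourceUser`, `/SourcePassword`, and `/TargetFile` switches are standard SqlPackage parameters; the server, database, and file names are placeholders, and I am assuming the `SqlPackage` executable is on your PATH.

```python
import subprocess


def sqlpackage_export_args(server, database, user, password, target_file):
    """Build the argument list for exporting a database to a local BACPAC file."""
    return [
        "SqlPackage",  # assumed to be on PATH; adjust the name or path if not
        "/Action:Export",
        f"/SourceServerName:{server}",
        f"/SourceDatabaseName:{database}",
        f"/SourceUser:{user}",
        f"/SourcePassword:{password}",
        f"/TargetFile:{target_file}",
    ]


def run_export(server, database, user, password, target_file):
    """Run the export; raises CalledProcessError if SqlPackage reports a failure."""
    subprocess.run(
        sqlpackage_export_args(server, database, user, password, target_file),
        check=True,
    )


if __name__ == "__main__":
    # Placeholder values; this only prints the command, it does not run it.
    args = sqlpackage_export_args(
        "myserver.database.windows.net", "Sales", "exportuser", "<password>", "sales.bacpac"
    )
    print(" ".join(args))
```

Because the target is a local file path, this is one way to get the offline copy onto a laptop without going through a storage account.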

QUESTION 5

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

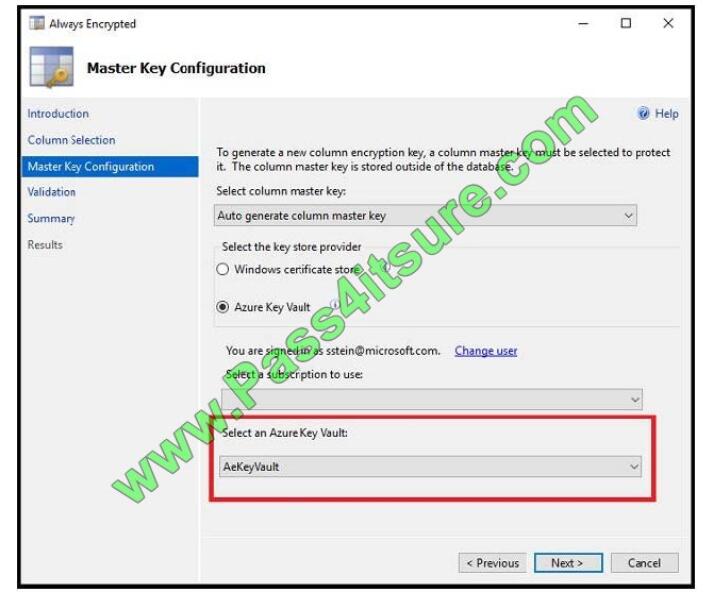

You need to configure data encryption for external applications.

Solution:

1.Access the Always Encrypted Wizard in SQL Server Management Studio

2.Select the column to be encrypted

3.Set the encryption type to Deterministic

4.Configure the master key to use the Windows Certificate Store

5.Validate configuration results and deploy the solution

Does the solution meet the goal?

A. Yes

B. No

Correct Answer: B

Use the Azure Key Vault, not the Windows Certificate Store, to store the master key.

Note: The Master Key Configuration page is where you set up your CMK (Column Master Key) and select the key store provider where the CMK will be stored. Currently, you can store a CMK in the Windows certificate store, Azure Key Vault, or a hardware security module (HSM).

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-always-encrypted-azure-key-vault

QUESTION 6

A company has a SaaS solution that uses Azure SQL Database with elastic pools. The solution contains a dedicated database for each customer organization. Customer organizations have peak usage at different periods during the year.

You need to implement the Azure SQL Database elastic pool to minimize cost.

Which option or options should you configure?

A. Number of transactions only

B. eDTUs per database only

C. Number of databases only

D. CPU usage only

E. eDTUs and max data size

Correct Answer: E

The best size for a pool depends on the aggregate resources needed for all databases in the pool. This involves determining the following:

Maximum resources utilized by all databases in the pool (either maximum DTUs or maximum vCores depending on your choice of resourcing model).

Maximum storage bytes utilized by all databases in the pool.

Note: Elastic pools enable the developer to purchase resources for a pool shared by multiple databases to accommodate unpredictable periods of usage by individual databases. You can configure resources for the pool based either on the DTU-based purchasing model or the vCore-based purchasing model.

References:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-elastic-pool
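To make the sizing idea concrete, here is a short Python sketch. The eDTU estimate follows the rule of thumb I remember from Microsoft's elastic pool guidance (the larger of "all databases at average load" and "concurrently peaking databases at peak load"), and the storage estimate is simply the sum of the database sizes. All numbers in the example are made up.

```python
def estimate_pool_edtus(total_dbs, avg_dtu_per_db, concurrent_peak_dbs, peak_dtu_per_db):
    """Estimate pool eDTUs as the larger of average load and concurrent peak load."""
    average_load = total_dbs * avg_dtu_per_db
    peak_load = concurrent_peak_dbs * peak_dtu_per_db
    return max(average_load, peak_load)


def estimate_pool_storage_gb(db_sizes_gb):
    """Estimate pool storage as the total data size of all databases in the pool."""
    return sum(db_sizes_gb)


if __name__ == "__main__":
    # Hypothetical SaaS tenant pool: 20 databases, customers peak at different times.
    edtus = estimate_pool_edtus(
        total_dbs=20, avg_dtu_per_db=10, concurrent_peak_dbs=3, peak_dtu_per_db=100
    )
    storage = estimate_pool_storage_gb([5] * 20)
    print(f"Estimated eDTUs: {edtus}, estimated storage: {storage} GB")
```

Because the customers in the question peak at different times of the year, only a few databases peak together, which is exactly why a shared pool is cheaper than sizing every database for its own peak.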

QUESTION 7

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You need to implement diagnostic logging for Data Warehouse monitoring.

Which log should you use?

A. RequestSteps

B. DmsWorkers

C. SqlRequests

D. ExecRequests

Correct Answer: C

Scenario:

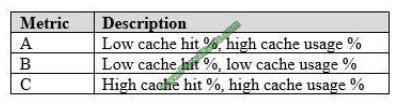

The Azure SQL Data Warehouse cache must be monitored when the database is being used.

QUESTION 8

You manage a solution that uses Azure HDInsight clusters.

You need to implement a solution to monitor cluster performance and status.

Which technology should you use?

A. Azure HDInsight .NET SDK

B. Azure HDInsight REST API

C. Ambari REST API

D. Azure Log Analytics

E. Ambari Web UI

Correct Answer: E

Ambari is the recommended tool for monitoring utilization across the whole cluster. The Ambari dashboard shows easily glanceable widgets that display metrics such as CPU, network, YARN memory, and HDFS disk usage. The specific metrics shown depend on cluster type. The “Hosts” tab shows metrics for individual nodes so you can ensure the load on your cluster is evenly distributed.

The Apache Ambari project is aimed at making Hadoop management simpler by developing software for provisioning, managing, and monitoring Apache Hadoop clusters. Ambari provides an intuitive, easy-to-use Hadoop management web UI backed by its RESTful APIs.

References: https://azure.microsoft.com/en-us/blog/monitoring-on-hdinsight-part-1-an-overview/

https://ambari.apache.org/

QUESTION 9

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets the stated goals.

You develop a data ingestion process that will import data to a Microsoft Azure SQL Data Warehouse. The data to be ingested resides in parquet files stored in an Azure Data Lake Gen 2 storage account.

You need to load the data from the Azure Data Lake Gen 2 storage account into the Azure SQL Data Warehouse.

Solution:

1.Create an external data source pointing to the Azure storage account

2.Create a workload group using the Azure storage account name as the pool name

3.Load the data using the INSERT…SELECT statement

Does the solution meet the goal?

A. Yes

B. No

Correct Answer: B

You need to create an external file format and external table using the external data source. You then load the data using the CREATE TABLE AS SELECT statement.

References: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-load-from-azure-data-lakestore
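The correct sequence described in the explanation can be sketched as a small Python helper that prints the T-SQL in order. The object names (`AdlsSource`, `ParquetFormat`, `dbo.SalesExternal`, `dbo.Sales`), the column list, and the credential name are all placeholders of mine, and I am assuming a database scoped credential with that name already exists.

```python
def polybase_load_statements(account, container, folder, columns_sql,
                             credential="AdlsCredential"):
    """Return the T-SQL statements, in order, for a PolyBase load from ADLS Gen2."""
    location = f"abfss://{container}@{account}.dfs.core.windows.net"
    return [
        # 1. External data source pointing at the storage account.
        "CREATE EXTERNAL DATA SOURCE AdlsSource\n"
        f"WITH (TYPE = HADOOP, LOCATION = '{location}', CREDENTIAL = {credential});",
        # 2. External file format describing the parquet files.
        "CREATE EXTERNAL FILE FORMAT ParquetFormat\n"
        "WITH (FORMAT_TYPE = PARQUET);",
        # 3. External table over the folder that holds the files.
        "CREATE EXTERNAL TABLE dbo.SalesExternal (\n"
        f"    {columns_sql}\n"
        ")\n"
        f"WITH (LOCATION = '{folder}', DATA_SOURCE = AdlsSource, "
        "FILE_FORMAT = ParquetFormat);",
        # 4. CREATE TABLE AS SELECT loads the data into the warehouse.
        "CREATE TABLE dbo.Sales\n"
        "WITH (DISTRIBUTION = ROUND_ROBIN, CLUSTERED COLUMNSTORE INDEX)\n"
        "AS SELECT * FROM dbo.SalesExternal;",
    ]


if __name__ == "__main__":
    statements = polybase_load_statements(
        "myaccount", "data", "/sales/", "SaleId INT, Amount DECIMAL(18, 2)"
    )
    print("\n\n".join(statements))
```

Notice there is no workload group anywhere in the sequence, which is why the proposed solution in the question does not meet the goal.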

QUESTION 10

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets the stated goals.

You develop data engineering solutions for a company.

A project requires the deployment of resources to Microsoft Azure for batch data processing on Azure HDInsight. Batch processing will run daily and must:

Scale to minimize costs

Be monitored for cluster performance

You need to recommend a tool that will monitor clusters and provide information to suggest how to scale.

Solution: Monitor clusters by using Azure Log Analytics and HDInsight cluster management solutions.

Does the solution meet the goal?

A. Yes

B. No

Correct Answer: A

HDInsight provides cluster-specific management solutions that you can add for Azure Monitor logs. Management solutions add functionality to Azure Monitor logs, providing additional data and analysis tools. These solutions collect important performance metrics from your HDInsight clusters and provide the tools to search the metrics. These solutions also provide visualizations and dashboards for most cluster types supported in HDInsight. By using the metrics that you collect with the solution, you can create custom monitoring rules and alerts.

References: https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-oms-log-analytics-tutorial

QUESTION 11

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You need to configure data encryption for external applications.

Solution:

1.Access the Always Encrypted Wizard in SQL Server Management Studio

2.Select the column to be encrypted

3.Set the encryption type to Deterministic

4.Configure the master key to use the Azure Key Vault

5.Validate configuration results and deploy the solution

Does the solution meet the goal?

A. Yes

B. No

Correct Answer: A

We use the Azure Key Vault, not the Windows Certificate Store, to store the master key.

Note: The Master Key Configuration page is where you set up your CMK (Column Master Key) and select the key store provider where the CMK will be stored. Currently, you can store a CMK in the Windows certificate store, Azure Key Vault, or a hardware security module (HSM).

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-always-encrypted-azure-key-vault

QUESTION 12

A company plans to use Azure Storage for file storage purposes. Compliance rules require:

A single storage account to store all operations including reads, writes and deletes

Retention of an on-premises copy of historical operations

You need to configure the storage account.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Configure the storage account to log read, write and delete operations for service type Blob

B. Use the AzCopy tool to download log data from $logs/blob

C. Configure the storage account to log read, write and delete operations for service-type table

D. Use the storage client to download log data from $logs/table

E. Configure the storage account to log read, write and delete operations for service type queue

Correct Answer: AB

Storage Logging logs request data in a set of blobs in a blob container named $logs in your storage account. This container does not show up if you list all the blob containers in your account but you can see its contents if you access it directly.

To view and analyze your log data, you should download the blobs that contain the log data you are interested in to a local machine. Many storage-browsing tools enable you to download blobs from your storage account; you can also use the Azure Storage team provided command-line Azure Copy Tool (AzCopy) to download your log data.

References: https://docs.microsoft.com/en-us/rest/api/storageservices/enabling-storage-logging-and-accessing-logdata
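When you download from `$logs`, it helps to know how the log blobs are named. As far as I know, Storage Analytics writes them under `<service>/YYYY/MM/DD/hhmm/<counter>.log` in UTC, with the minutes always `00`. The Python sketch below builds the prefix for a given hour so you can narrow a download to it; the account name is a placeholder and the helper names are my own.

```python
from datetime import datetime


def log_prefix(service, hour):
    """Blob name prefix inside $logs for one service and one hour (UTC)."""
    # Minutes are fixed at 00 because logs are grouped by hour.
    return f"{service}/{hour:%Y/%m/%d/%H}00/"


def logs_url(account, service, hour):
    """URL of the folder in the $logs container that holds that hour's log blobs."""
    return f"https://{account}.blob.core.windows.net/$logs/{log_prefix(service, hour)}"


if __name__ == "__main__":
    # Placeholder account name and date.
    print(logs_url("myaccount", "blob", datetime(2019, 7, 31, 18)))
```

You can pass a URL like this, with your own SAS token or login, to AzCopy or any storage browser to pull just the hours you need to keep on-premises.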

QUESTION 13

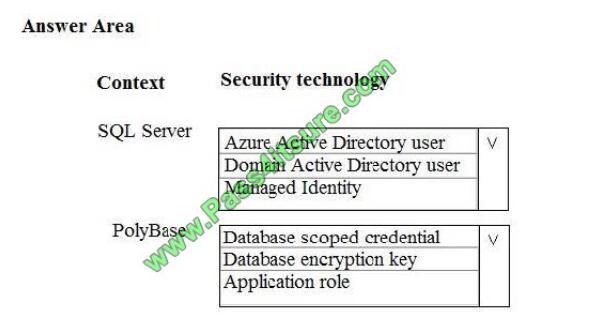

You need to ensure polling data security requirements are met.

Which security technologies should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Box 1: Azure Active Directory user

Scenario:

Access to polling data must be set on a per-Active Directory user basis

Box 2: Database Scoped Credential

SQL Server uses a database scoped credential to access non-public Azure blob storage or Kerberos-secured Hadoop clusters with PolyBase.

PolyBase cannot authenticate by using Azure AD authentication.

References:

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-database-scoped-credential-transact-sql

[PDF] Free Microsoft DP-200 pdf dumps download from Google Drive: https://drive.google.com/open?id=1lJNE54_9AAyU9kzPI_8NR-PPFqNYM7ys

Tips: Exam DP-200 | My Experience

Compared to many other Azure certifications (such as 775, 776, AZ-300 or AZ-301), I found the DP-200 easier. I completed the test in 100 minutes, which left enough time to re-check my answers. I can’t reveal any questions, but I will give you some directions for preparation.

Accurate and useful learning materials

If you have accurate and useful learning materials, you can get the best results from this exam.

Exam Questions

Some companies offer practice questions for the exams you plan to take, for example Pass4itsure. They update their products frequently, so customers always have the latest version of the DP-200 dumps. You can practice with them; most of the questions are accurate and well suited to exam preparation.

Don’t overthink!

Finally, don’t think too much. There is no “it depends” answer in the Microsoft certification exam world. Even though I wish I could add “yes, but if…” to many answers, I can’t. You need to go in with the right answer or the best answer.

Choose Pass4itsure.com

Summary:

While you are training, you will want to find DP-200 practice exams. You can download the Microsoft DP-200 exam dumps from https://www.pass4itsure.com/dp-200.html, which cover all of the Implementing an Azure Data Solution test objectives.